The JAX Variable Latency Delay is a special realtime effect based on an audio research project whose goal was to modify playback speed within a stream of constant sample rate, with or without optional pitch compensation. (It has always been a dream of ours to create this kind of time machine.)

Streaming audio is usually a continuous process with a fixed sample rate, which makes it quite difficult to change the playback speed without introducing problems and discontinuities. Simply slowing down or speeding up the stream would leave gaps or pile up unplayed samples, breaking the continuity of the audio flow and all the rules with it. Time cannot be modified in nature.

So we sat down and switched our brains on to find a way around this fixed paradigm, and came up with a musically useful technique for realtime audio processing. (Note: we are not talking about offline processing here, where this is rather easy to achieve.)

We wanted to use Apple's Audio Unit API to realise this and, as is well known, an Audio Unit cannot change the sample rate dynamically. Nor can it request a sample rate change from the host, and the host itself cannot change the sample rate on the fly (dynamically) on any Apple device.

(Apple's realtime audio system suffers badly from being tied to a specific, driver-dependent sample rate. This is an issue by design, and it causes a lot of the fundamental problems on current Apple devices, including many existing Audio Units that actually process audio at the wrong sample rate. Mostly this happens because many host and plugin developers do not understand how Apple's audio system is supposed to work, which makes these problems even worse. It seems that sometimes even Apple no longer understands this audio system. We think this is a conceptual flaw.)

Our approach, however, is a delay-based mechanism. It has some limitations of its own, of course, but it can actually slow down and speed up continuous audio streams in realtime, within certain limits and subject to certain rules.

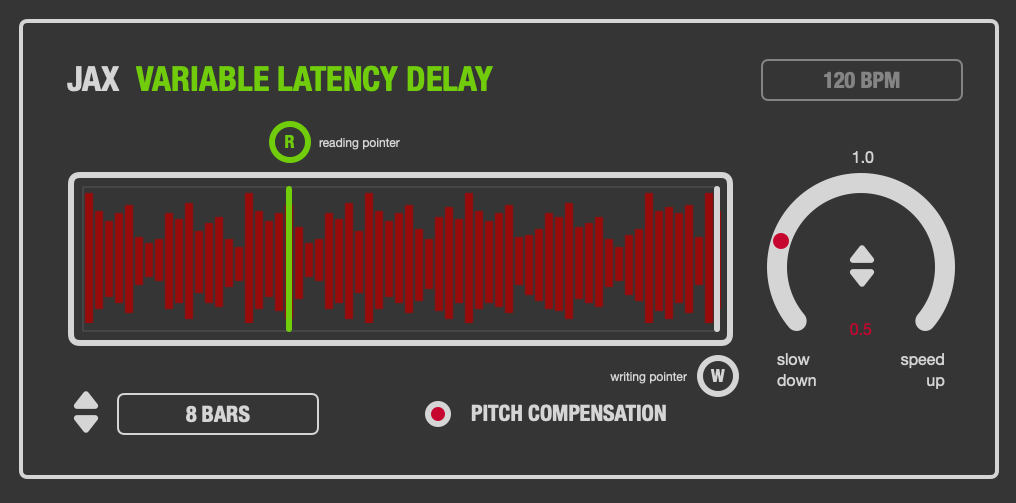

The central mechanism is a delay (latency) buffer of definable length, with a circular read pointer of variable speed and a write pointer of constant speed. The size of the delay buffer can be adjusted (not in realtime) to the tempo of the host, so that the resulting effect becomes controllable.

The write pointer into this audio buffer always moves at constant speed, as this is required to keep the constant audio stream continuous. The read pointer, however, can for instance become slower, giving the impression of a temporary speed reduction within a certain timeframe.

If the read pointer is anywhere behind the write pointer, it can be sped up until it reaches the position of the write pointer again, which speeds up the audio. The read pointer must never overtake the write pointer, of course, and it should never become so slow (or get stuck) that the write pointer laps it, since any such overrun would destroy the audio flow.
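The pointer rules above can be illustrated with a minimal Python sketch. It is not the plugin's actual implementation: the class name `VariableSpeedDelay`, the linear interpolation between neighbouring samples, and the choice to clamp the read pointer (rather than handle the limits some other way) are all assumptions made for illustration.

```python
import math


class VariableSpeedDelay:
    """Circular buffer with a constant-speed writer and a variable-speed reader.

    Hypothetical sketch: names and the clamping strategy are assumptions.
    """

    def __init__(self, size):
        if size < 2:
            raise ValueError("size must be at least 2 samples")
        self.size = size
        self.buf = [0.0] * size
        self.written = 0      # total samples written so far (integer)
        self.read_pos = 0.0   # absolute, fractional position of the reader

    def process(self, x, speed):
        """Write one input sample, return one output sample.

        speed is the reader's rate: 1.0 = normal, < 1.0 slower, > 1.0 faster.
        """
        # The writer always advances by exactly one sample per call.
        self.buf[self.written % self.size] = x
        self.written += 1

        newest = self.written - 1          # reader must not overtake this
        oldest = self.written - self.size  # reader must not fall behind this
        self.read_pos = min(max(self.read_pos, oldest), newest)

        # Linear interpolation between the two samples around read_pos.
        i0 = math.floor(self.read_pos)
        frac = self.read_pos - i0
        a = self.buf[i0 % self.size]
        b = self.buf[(i0 + 1) % self.size]
        y = a + frac * (b - a)

        # The reader advances by the requested speed (never backwards).
        self.read_pos += max(0.0, speed)
        return y
```

At a speed of 1.0 the reader stays on the newest sample and the signal passes through unchanged. At 0.5 the reader falls behind by half a sample per call, and only then can a speed above 1.0 have any effect, until the reader has caught up with the writer again.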

The principle boils down to the fact that the audio must first be slowed down before it can be sped up again. The size of the buffer therefore strongly affects the sonic result (time dependency) of this effect. It should be set to a musically useful size, probably 4 or 8 musical bars, depending on the host's tempo and division and on the desired audible effect length.
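As a rough illustration of how a buffer size in bars translates into samples, here is a small Python sketch. The function names are hypothetical, and it assumes that one beat is a quarter note, that the bar length is given in beats, and that the reader runs at a constant speed below 1.0 while the buffer fills.

```python
import math


def delay_buffer_samples(bars, bpm, sample_rate, beats_per_bar=4):
    """Buffer length in samples for a number of musical bars.

    Assumption: bpm counts quarter-note beats, beats_per_bar beats per bar.
    """
    seconds_per_beat = 60.0 / bpm
    seconds = bars * beats_per_bar * seconds_per_beat
    return round(seconds * sample_rate)


def seconds_until_full(buffer_seconds, speed):
    """Time until a reader at constant speed < 1.0 has used up the buffer.

    The reader loses (1 - speed) seconds of audio per second of real time.
    """
    if speed >= 1.0:
        return math.inf  # the reader never falls behind
    return buffer_seconds / (1.0 - speed)


# 4 bars of 4/4 at 120 BPM and 48 kHz: 16 beats of 0.5 s each = 8 s.
print(delay_buffer_samples(4, 120, 48000))   # 384000
# At half speed, an 8 s buffer is used up after 16 s.
print(seconds_until_full(8.0, 0.5))          # 16.0
```

This shows why the buffer size sets the time scale of the effect: a longer buffer allows a longer or deeper slowdown before the reader has to speed up again.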

The entire processing is designed to allow dynamic speed changes rather than so-called 'halftime' effects with a fixed ratio. Side note: some continuous time modification effects, such as choruses, use the same principle, but with much smaller circular buffers and some low-frequency oscillation components.

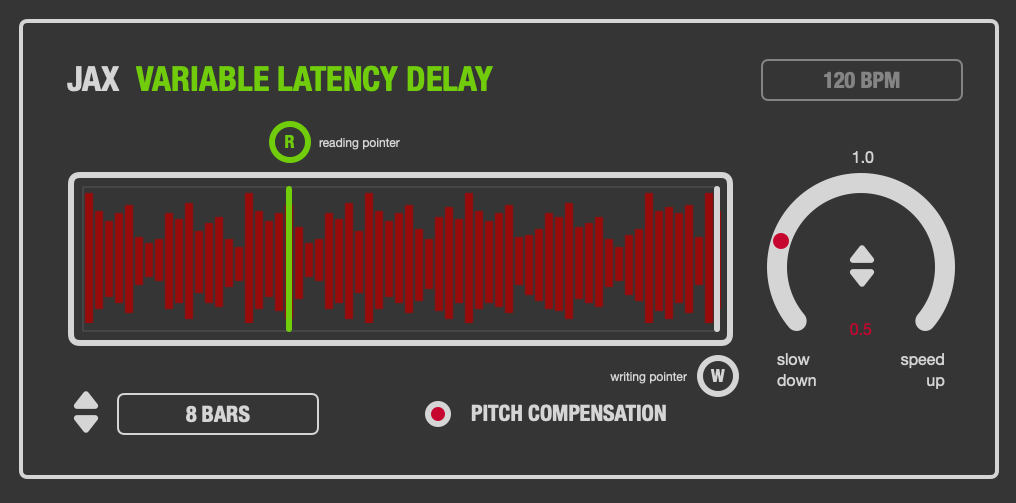

Slowing down and speeding up audio naturally also changes its pitch. This can be compensated with a proportionally coupled pitch shifter of any kind, which cancels the "wrong" pitch when applied in the inverse direction.
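The relationship between read speed and the required compensation can be written down in a few lines of Python. This is only a sketch of the arithmetic, assuming equal-tempered semitones and a pitch shifter that accepts either a frequency ratio or a semitone offset; the function names are hypothetical and no particular pitch shifting algorithm is implied.

```python
import math


def pitch_shift_semitones(speed):
    """Pitch change caused by playing audio at the given speed ratio."""
    return 12.0 * math.log2(speed)


def compensation_ratio(speed):
    """Frequency ratio a pitch shifter must apply to cancel the change."""
    return 1.0 / speed


def compensation_semitones(speed):
    """The same compensation expressed in semitones (inverse direction)."""
    return -pitch_shift_semitones(speed)


# Half speed lowers the pitch by one octave (12 semitones),
# so the shifter has to raise it by one octave (ratio 2.0).
print(pitch_shift_semitones(0.5))    # -12.0
print(compensation_semitones(0.5))   # 12.0
print(compensation_ratio(0.5))       # 2.0
```

Because the speed changes dynamically, the compensation would have to be recomputed whenever the read speed changes, which is what "proportionally coupled" amounts to here.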

This way, a constant pitch can be emulated. The sonic effect is then the impression of a tempo change without audible pitch change, a result that is impossible in nature because it would require modifying TIME directly, which to this day is simply impossible in the real world.

However, it is easily possible in the digital domain, and with other special artificial concepts such as those used in movies (e.g. slow motion). These methods all have something in common: they attempt to trick TIME by creating an illusion.